# 11/03/21 - How To Spot A Thylacine (A Meandering Odyssey Through Post-Truthism, Reality TV, Food Pyramids, UFOlogists, Deep Fakes and Complexity Theory)

The more you know, the more you realise you don't know - Aristotle

All I know is that I don't know nuthin - Operation Ivy

# Introduction (We Found a Thylacine)

For a brief six-hour period last week, I was captivated by rumours that a family of Thylacines (or Tasmanian Tigers) had been spotted in the North-East of Tasmania. If verified, this discovery would have reversed the official classification of the species as extinct, but it didn't take long for the Tasmanian Museum and Art Gallery to pour cold water on the whole thing. This episode, though trivial, did cause me to consider the nature of belief and what it would take for me (a naturally incredulous person) to be swept up in a conspiracy theory. For years now (usually in response to an apparently existential event like Brexit or the election of Donald Trump), we have heard those in the "sensible centre" lament the death of objective truth, and while it does appear that facts are increasingly up for grabs in our polarised world, this trend did not materialise in a vacuum, nor can it be explained away as "stupidity". This phenomenon has intelligible roots and a historical context which, once understood, can illuminate and even aid in navigating our "Post-Truth" landscape.

The Thylacine was a marsupial closely related to the Tasmanian Devil that originally lived in New Guinea and Australia. By the time the British arrived, it was found solely on the island of Tasmania, but by 1936 the species had been hunted into extinction. Or at least that's what the "experts" say... On the other side of the debate, you have a sizeable cohort of believers who maintain that the elusive animal is still out there, including Neil Waters, who is shown below claiming the most recent discovery.

Now sure, Waters does look a bit dishevelled, and yes, he is drinking a beer, and absolutely there were naysayers on Twitter pointing to all the times his organisation (Thylacine Awareness Group of Australia) had erroneously made similar declarations, but there were some legitimate reasons to take his claims seriously. The most compelling evidence in support of the ongoing existence of Thylacines came from a recent paper, Extinction of the Thylacine. This article, which admittedly is yet to be peer-reviewed, collates and characterises thousands of unconfirmed sightings since 1910 and uses this data to reconstruct a map of the species' geospatial movements. Using this methodology, the authors conclude that the true date of extinction probably lies in the 1990s or early 2000s and posit that it is possible (though improbable) that the species has survived to the present day. Also encouraging were the following facts:

- The Thylacine was an apex predator (so had no natural enemies).

- The Thylacine was nocturnal and shy (which could explain the lack of human contact).

- Very few camera traps have been deployed in Tasmania (these are used commonly to find rare and endangered animals).

After reading the aforementioned paper and skimming a few articles, I found myself in a familiar and distinctly modern position: I was implicitly being asked to come to a conclusion on a subject I do not, and simply can not understand. In this case, the stakes were insignificantly low, but the Thylacine question is emblematic of so many dilemmas we face in contemporary society and is part of a broader phenomenon, sometimes described as "Post-Truthism". Often this term is used pejoratively to diminish political opponents, but in this article, I will earnestly explore the nature of our fact-free existence and try to explain how we got here.

# Information, Chaos and Complexity

Today's world is comprised of increasingly chaotic and complex systems and humans are devastatingly ill-equipped to process the fire-hose of information we are confronted with. You have probably heard some version of this seemingly bromidic statement before, but the precise definitions of the terminology involved are often overlooked. Information, Chaos and Complexity are subjects worthy of whole books (and many have been written) but in this section, I will try to provide an overview of what these words refer to in a technical context.

# Entropy and Information

To understand information we must first start with entropy. Entropy is a concept that originally came out of thermodynamics but has been applied in many diverse fields and can be thought of as a measure of disorder or randomness. Central to this discussion is the second law of thermodynamics, which states that the entropy of an isolated system will never decrease spontaneously. That is to say, the system will tend towards disorder unless work is done on it (i.e. energy is expended).

To illustrate this further, consider two cups: one containing a blue liquid and one containing a red liquid. Now if we were to pour the blue cup into the red cup, what would we see (for this example let's assume there is no chemical reaction between the liquids, only mixing)? Well, initially the entropy of the system is low as the red molecules are mostly separate from the blue molecules, but with time the two colours begin to mix and eventually we will be left with a homogeneous purple liquid (the red and blue molecules are now thoroughly mixed and the entropy of the system has increased). So far the system has behaved as expected according to the second law of thermodynamics, but let us imagine what a breach of this law (a decrease in entropy) would look like. For the entropy to decrease we would need the molecules to become more ordered, and while there is no physical reason why all of the red molecules couldn't move to the left side of the cup and all the blue molecules to the right through random motion, the odds of this happening are so vanishingly small that it is not a process we ever observe.
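To put a rough number on why we never see the liquids unmix, here is a minimal sketch. It assumes a toy model that is not part of the physics described above: every molecule is independently and equally likely to be found in the left or right half of the cup. The function name `log10_prob_unmixed` is made up for illustration.

```python
import math

def log10_prob_unmixed(n_red, n_blue):
    """log10 of the chance that every red molecule sits in the left half
    and every blue molecule sits in the right half at a given instant.

    Toy assumption: each molecule independently has a 1/2 chance of being
    on either side, so the probability is (1/2) ** (n_red + n_blue).
    Working in log10 avoids underflow for large molecule counts.
    """
    return -(n_red + n_blue) * math.log10(2)

# Even tiny "cups" make spontaneous unmixing absurdly unlikely.
for n in (5, 50, 500):
    print(n, "molecules of each colour -> probability of about 10 **",
          round(log10_prob_unmixed(n, n), 1))
```

A real cup holds vastly more molecules than any of these examples, so the exponent becomes correspondingly more negative.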

The link between entropy and information came through a thought experiment by British physicist James Clerk Maxwell known as "Maxwell's Demon". Maxwell described a box split into two chambers and connected by a massless and frictionless door. In the box is a demon who observes the velocity of air molecules and opens the door to allow fast ones into the right chamber and slow ones into the left. He is also careful to close the door to stop fast molecules from leaving the right chamber and slow molecules from leaving the left. After a while, the demon will have organised the box so that the right side is hot and the left side is cold and therefore the entropy of the system will have decreased (apparently breaking the second law of thermodynamics). The solution to this paradox came 60 years later through Hungarian physicist Leo Szilard, who argued that the demon's act of observing and measuring the speed of each molecule requires energy, and as a result, must produce more entropy in the system than the decrease through sorting. In solving this problem, Szilard was the first to quantify information as a binary digit or "bit" (in this case the speed of a molecule encoded as either 1 for fast or 0 for slow) and linked thermodynamics and Information Theory.

Finally, we get to the father of Information Theory, Claude Shannon, whose work focused on communication and was inspired by Szilard. What Shannon developed was the concept of "Information Entropy" as a measure of the content in a message, which is often loosely characterised as the amount of "surprise" a receiver gets from a message. For example, the amount of information in a fair coin flip is 1 bit (it can be encoded in a single binary digit of 0 or 1) but the information in the roll of a fair die is about 2.585 bits (the base-2 logarithm of 6). Intuitively this should make sense because a message that a coin has come up Heads is less "surprising" than a message that a die has come up with a 4 (since there are only 2 equally likely outcomes in the former as opposed to 6).
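The coin and die figures can be checked with a few lines of Python. This is a minimal sketch of Shannon's entropy formula, H = -Σ p·log2(p); the function name `shannon_entropy` and the biased coin are illustrative additions rather than anything from Shannon's paper.

```python
import math

def shannon_entropy(probabilities):
    """Shannon entropy in bits of a discrete probability distribution."""
    # Outcomes with zero probability contribute nothing to the sum.
    return -sum(p * math.log2(p) for p in probabilities if p > 0)

fair_coin = [1 / 2] * 2
fair_die = [1 / 6] * 6
biased_coin = [0.9, 0.1]  # hypothetical example: a coin that lands Heads 90% of the time

print(round(shannon_entropy(fair_coin), 3))    # 1.0
print(round(shannon_entropy(fair_die), 3))     # 2.585
print(round(shannon_entropy(biased_coin), 3))  # 0.469
```

The biased coin carries less than 1 bit per flip because its outcome is less surprising on average.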

These are dense topics and I have had to skim over them, but my primary aim here is to dispel the notion that information is some vague, nebulous term. In fact, it has a precise definition rooted in the laws of physics and is measurable. Now if information is tangible and quantifiable, and the energy required to observe and internalise information is non-zero (as suggested by Maxwell's Demon), then it follows that humans must have a maximum processing capacity. Some have even attempted to quantify this capacity (e.g. Riener writes in Emotions and Affect in Human Factors and Human-Computer Interaction that "Human information processing has an essential bottleneck. More than 10 million bits/s arriving at our sensory organs but only a very limited portion of about 50 bits is receiving full attention.") but for our purposes, it is sufficient to recognise that this theoretical limit must exist.

# Chaos

Isaac Newton viewed the world as a giant mechanical machine, so much so that he even described his own theory of gravity (and the concept of action at a distance) as "absurd". This orthodoxy of a clockwork universe was prevalent until the late 19th century but was eventually crushed by curious research in a branch of nonlinear dynamics we now call Chaos Theory. What this research showed was that in some deterministic systems, tiny differences in initial conditions will lead to wildly divergent outcomes, or as put by Edward Lorenz, chaos is "when the present determines the future, but the approximate present does not approximately determine the future". One of the earliest discoveries of sensitivity to initial conditions related to the Three-Body Problem, which grew out of failed attempts to find a general solution for the motion of three masses (most commonly the sun, earth and moon). Even today, meteorologists are still unable to predict the weather with much accuracy more than a week or two ahead, and that is because the weather is a chaotic system.

At first glance, it may seem as though sensitivity to initial conditions could be overcome by measuring these initial conditions with greater accuracy, but unfortunately, the reality is not so simple. Let us consider a chaotic system where one of the inputs is the current air temperature. Even ignoring thermometer inaccuracies, the observed temperature will be an approximation by necessity. If we have a good thermometer it may give a reading to two decimal places and if we have a great thermometer it may even show four, but what we actually need is a thermometer with infinite decimal places. Real numbers can not be measured or represented with infinite precision, and therefore we can not escape the intrinsic unpredictability of chaotic systems.
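Sensitivity to initial conditions is easy to see in code. The sketch below uses the logistic map, a standard textbook toy system that stands in for the weather here (it is my choice of example, not something from the research described above). Two starting values that differ by one part in ten billion, like two readings from an extremely good thermometer, are iterated side by side.

```python
def logistic_orbit(x0, steps, r=4.0):
    """Iterate x -> r * x * (1 - x) and return every value visited.

    With r = 4.0 the logistic map is a well-known chaotic system.
    """
    orbit = [x0]
    for _ in range(steps):
        orbit.append(r * orbit[-1] * (1 - orbit[-1]))
    return orbit

# Two "measurements" of the same initial condition, differing by 1e-10.
a = logistic_orbit(0.2, 50)
b = logistic_orbit(0.2 + 1e-10, 50)

gaps = [abs(x - y) for x, y in zip(a, b)]
for step in (0, 10, 20, 30, 40, 50):
    print(step, gaps[step])
```

The gap starts out negligible and grows until the two trajectories bear no resemblance to each other, even though the rule generating them is completely deterministic.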

# Complexity

A related concept to chaos is complexity. Not all chaotic systems are complex and not all complex systems are chaotic, but both are difficult to model. The term complex is often used interchangeably with the word complicated, but in reality, these are distinct, though overlapping, descriptors. While a complicated system may be intricate or elaborate, complex systems have some specific properties:

- Networks: Complex systems are large networks of relatively simple components with no central control.

- Emergent Behaviour: In complex systems, complex behaviour emerges from the simple behaviour of components.

- Information Processing: Information is ingested, processed and output by the system.

Many of the systems that we participate in, are governed by and are comprised of turn out to be complex (e.g. the human brain, financial markets, the internet, culture etc.) which implies that much of the emergent behaviour of these systems can not be well understood as a function of components. For example, economic modelling is notoriously inaccurate, but we should not expect to be able to predict market crashes by looking at the behaviour of individual consumers any more than we should expect to understand the thoughts of a person by mapping the firing of individual neurons in their brain.

If, ironically, all this talk of entropy and emergent behaviour was too much to take in, here is my Cliff Notes version:

We can not comprehend the present and even if we could, this would not allow us to predict the future.

# Why Does My Heart Feel So Bad?

It turns out that the vague dysphoric sense of being overwhelmed that most of us carry around is a perfectly natural response to our society of over-saturation. I have a theory that the prevalence of anxiety disorders in our age could be linked with our inability to parse this never-ending onslaught of information, and that this is expressed in the activities we turn to for relief. Perhaps the most conspicuous manifestation is in our insatiable appetite for drugs like alcohol, opiates and benzodiazepines, which empower us to tune out the noise of our day to day experience. There are also clues in the "mindless" entertainment that we consume. What better way to soothe our over-stimulated brains than to sink into the lounge and focus on stories with archetypal characters and familiar plots? Marvel movies aren't popular in spite of their stupidity, but because of it. But fiction can only take us so far. The holy grail of mental pacification is reality TV, a format in which the same reductive narratives and superficial characters exist, but in an enhanced package that allows us to believe (if only for an hour a week) that maybe this is what the world is really like.

To be clear, I do not mean to criticise those that indulge in these activities, because to some extent we all do. If anything, giving yourself over to the chaos is a more noble philosophy than the delusional asceticism of those that believe, not only that the world can be comprehended, but that they personally have managed it. While "Logic Bros" might get a kick out of mocking anti-vaxxers on Twitter and memorising Sam Harris quotes, I would argue that they vastly over-estimate their ability to "think critically". What thinking critically tends to look like in practice, is the recapitulation of ideas from YouTube (ironically a similar methodology to the folks they deride). This is not to say that all opinions are equally valid, and while in the previous section I argued that we can never say everything about a subject, it doesn't follow that we can't say anything. Some answers are better than others, some arguments are incoherent and many things we collectively believe are supported by enough evidence as to be uncontroversial, but avoid those who speak in absolutes (they tend to be insufferable anyway).

# Democracy in a Post-Truth World

Democracy is a system of government where the population make all legislative decisions (either directly or through representatives), but in a society where most of the issues being debated are literally impenetrable to voters, how effective can this form of law-making be? I can't imagine the Ancient Greeks voting in their early democracy were required to contend with inscrutable topics like dividend imputation credits or post-pandemic economic stimulus. To avoid the bulging scope of this article blowing out even further, I am going to ignore the role of culture and values in opinion-forming (I have written about this before) and will also avoid the flawed mechanics of government and the disenfranchising effects it produces (I have touched on this before too). The focus rather will be on how we come to agree upon facts and our process of surfacing evidence and making arguments.

# A Quick Digression on Proof

The first thing to say here is that you don't "prove" things in the real world, you can merely find evidence to support your claims. The idea of a "proof" exists only in the formal systems of mathematics, but even here we must initially make assumptions that we do not prove. In these systems, we begin with a set of axioms (statements that we assume to be true) and build our body of knowledge piece by piece. To prove any statement within this system, it must be shown to follow logically from these initial axioms (at which point it becomes a theorem). The Peano axioms, for example, relate to natural numbers and include:

- 0 is a natural number.

- For every natural number x, x = x.

- For all natural numbers x and y, if x = y, then y = x.

Notice that these axioms seem to be self-evident, but they are assumptions nonetheless. Just as an aside, we also know (due to Gödel's Incompleteness Theorems) that in any consistent axiomatic system expressive enough to describe basic arithmetic, there will be statements that are true, but which can not be proven from within that system 😱.

# Is The Sky Really Falling?

To make this discussion more concrete, let's consider the example of public policy on climate change. All reasonable people believe that the climate is changing due to human activity but how is it that we all came to this view? One way to approach this would be to go back to first principles and try to understand the underlying science. In this case, luckily, the central hypothesis is not that hard to understand: Human activity produces vast quantities of carbon dioxide and other greenhouse gases, these gases have a heat-trapping property and therefore, in response to the increase in greenhouse gases, the Earth will warm. We understand the chemistry here but we also have plenty of empirical evidence to support the argument:

- Ice cores have been drawn from Antarctica (and other places) that show the climate has historically responded to variations in greenhouse gas levels.

- The global surface temperature has been increasing as predicted.

- Sea levels are rising (due to warming oceans and shrinking ice sheets).

- The number of extreme weather events is increasing as predicted.

Now the truth is, most people are not going to read all the research on climate change to form their view, and even those that do will not follow the science down every single rabbit hole. For example, I did some reading to summarise the scientific view above, but I did not look into the exact chemistry of how greenhouse gases trap heat and I did not seek out the exact data that shows the global surface temperature is rising. And even if I did do all of that, I still would need to look into the methodology of how surface temperature is measured and research the quality of the equipment used. I would also need to research the bodies that record and aggregate these measurements and assess their motivations. The point is, no matter how much research you do, at some point you are going to need to take a leap of faith and trust your source of information. The issue of climate change is only one of myriad topics we engage with, and in fact, it is one of the least thorny (since the science is easily comprehended and there is consensus amongst experts). Even if we were inclined to dig into the exact mechanics of this subject, we simply do not have the capacity to do so on every issue.

# A Grossly Simplified Model For Decision Making

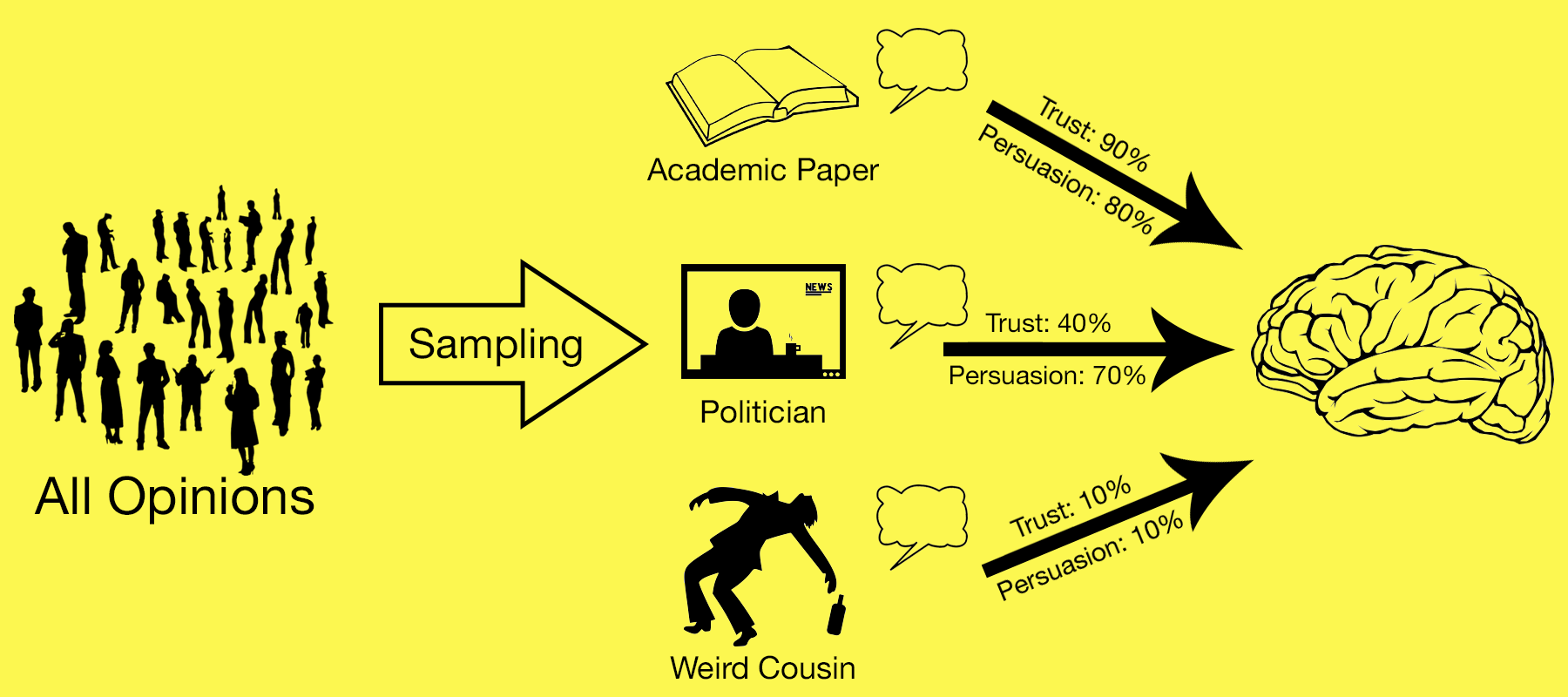

Let us dig a little deeper into the process of how we form our views. On any given topic, there are countless opinions and voices out there but we only get exposed to a tiny subset of these. We form our own opinion by aggregating this subset of views weighted by two factors:

- How much do we trust each source?

- How persuasive was each argument?

To go back to our climate change example, imagine you have only come across three opinions on the subject.

- You have read an academic paper in a peer-reviewed climatology journal that explains the science behind climate change and provides evidence for their claims.

- You saw a politician on television arguing that climate change may be real but it is not due to human activity. He pointed to the fact that there have been ice ages and other climatic shifts throughout the Earth's history.

- You got chatting to your weird cousin at a wedding who told you that all the evidence for climate change has been faked by "Green Conspirators" who stand to profit from the adoption of renewable energy sources.

Subconsciously you will grade each of these three opinions based on the criteria above to form your own view. For example, if you have high trust in the scientific journal and the evidence put forward made a lot of sense to you, then you would weight this argument more heavily in your internal aggregation than that of your cousin. An example of what this might look like is shown below.
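As a rough sketch, the same weighting can be written in a few lines of Python. Everything numeric here is an assumption made for illustration: the `Opinion` class, the stance scale, the individual scores and the choice to multiply trust by persuasiveness are not measurements, just one simple way to combine the two factors.

```python
from dataclasses import dataclass

@dataclass
class Opinion:
    source: str
    stance: float          # -1.0 = "not caused by humans", +1.0 = "caused by humans"
    trust: float           # 0..1, how much we trust the source
    persuasiveness: float  # 0..1, how convincing the argument felt

def aggregate(opinions):
    """Weighted average stance, weighting each opinion by trust * persuasiveness."""
    weights = [o.trust * o.persuasiveness for o in opinions]
    total = sum(weights)
    if total == 0:
        return 0.0  # no usable opinions, so no view either way
    return sum(w * o.stance for w, o in zip(weights, opinions)) / total

# Hypothetical scores for the three opinions described above.
opinions = [
    Opinion("peer-reviewed paper", stance=1.0, trust=0.9, persuasiveness=0.8),
    Opinion("politician on TV", stance=-0.5, trust=0.4, persuasiveness=0.5),
    Opinion("weird cousin", stance=-1.0, trust=0.1, persuasiveness=0.2),
]

view = aggregate(opinions)
print(round(view, 2))  # roughly 0.64, i.e. leaning strongly towards the paper
```

Changing the trust scores (say, a reader who distrusts journals and adores the politician) flips the result, which is the weakness the rest of this section explores.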

In theory, this is a sensible way to make decisions and in some circumstances, I believe it serves us well. We internalise a mix of different views and soberly compensate for any perceived biases. For complicated issues, however, the system breaks down in three key areas of my model:

- Sampling Bias: The subset of views that we come into contact with are often deeply skewed and can give the impression that certain opinions are more widely-held than they really are.

- Misplaced Trust: Our trust in institutions has been eroded by decades of lies, corruption and errors.

- Ease of Persuasion: While we like to think we judge arguments on their merits, as outlined in the section on Information, Chaos and Complexity, some issues are literally inscrutable to us. Even those that aren't must pass through the cognitive biases and flawed reasoning we are all afflicted by.

In the following three sections, I will explain in more detail what these decision-making defects look like, why they exist and how they contribute to our current Post-Truth predicament.

# Sampling Bias

I'll keep this section short because plenty has been written already and I'm getting bored typing it, but social media is like totally an echo chamber dude. Most of the information and opinions that we come into contact with (be that from friends or news sources) are through social media apps, and while this can create the illusion of a representative sample, it is not.

The echo chamber effect is well understood to be a factor in political polarisation, as the content you come across is based purely on the people you follow and an algorithm designed to show you content you will like. If 99% of people posting on Facebook about Hydroxychloroquine are saying that the drug doesn't work, but you only ever interact with the 1% that believe it is a miracle cure for COVID-19, then you are likely to severely overestimate the strength of that argument.
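The arithmetic behind this distortion can be sketched as follows. The exposure probabilities are invented for illustration (no real feed algorithm is being modelled), and `perceived_share` is a hypothetical helper name.

```python
def perceived_share(true_share, exposure_if_pro, exposure_if_con):
    """Fraction of the posts you actually see that support a claim.

    true_share      -- fraction of all posts that support the claim
    exposure_if_pro -- chance that a supporting post reaches your feed
    exposure_if_con -- chance that an opposing post reaches your feed
    """
    seen_pro = true_share * exposure_if_pro
    seen_con = (1 - true_share) * exposure_if_con
    return seen_pro / (seen_pro + seen_con)

# An unfiltered feed reflects the real 1% / 99% split.
print(round(perceived_share(0.01, 1.0, 1.0), 3))

# A heavily filtered feed (assumed numbers): supporting posts almost always
# shown, opposing posts almost never shown.
print(round(perceived_share(0.01, 0.9, 0.001), 3))  # about 0.9
```

Under these assumed numbers, a view held by 1% of posters makes up roughly 90% of what you see.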

Social media has certainly intensified this effect, but it is not the only arena where it plays out and it didn't start there. At the most basic level, humans tend to surround themselves with like-minded people and social spheres tend not to be very diverse. In many ways, this is understandable (we enjoy the company of people who have similar values and interests to us) but it does lead to a bias in the opinions we come across. For example, if you don't know a single black person, there is a pretty good chance your perspective on Black Lives Matter is skewed.

The other factor here is media ownership. Not everybody gets their news from social media, but if you only read one newspaper (or multiple newspapers owned by the same tycoon) then the echo chamber effect still applies. In Australia, 92% of the market (based on average issue readership) is owned by just four companies, and News Corp (the organisation most enamoured with falsehoods) is far and away the biggest player with 52%.

# Misplaced Trust

Trust in institutions has been declining in western countries for many years, but this should come as no surprise given our history of obscurantism.

# Trust In Government

Is it any wonder that people have no trust in politicians and public figures when they have been caught out lying to their constituents so many times? We are now so desensitised to mendacity and misdirection that we barely notice when leaders like Donald Trump and Scott Morrison unblinkingly trot out falsehoods day after day. These characters have collectively pulled off the equivalent of washing up so badly that you never get asked to do it again. They lie about relatively small things (e.g. the prevalence of African street gangs in Melbourne or the size of the COVID-19 stimulus cheques in America) but even some of the most ludicrous sounding conspiracy theories have turned out to be true:

- In 2001 the Howard government in Australia lied about asylum seekers throwing children overboard and sinking their own ship to shore up support for their "strong on borders" policy. It turned out there was no evidence of anybody being thrown overboard and the vessel sank due to the strain of being towed by the Australian Navy.

- Western governments lied and misrepresented intelligence about "Weapons of Mass Destruction" in Iraq, to justify a war that killed hundreds of thousands of people (it's hard to be specific as they didn't bother counting the Iraqis) and is estimated to end up costing $3 trillion.

- Many reported UFO sightings in the 1980s and 1990s were actually the result of the US military testing weapons. The NSA actively spread disinformation about extraterrestrials to hide advanced technology, and even "leaked" fake internal documents to ufologists.

- The CIA performed dangerous and fatal mind-control experiments on US citizens as part of Project MK Ultra. Some of these involved giving subjects high doses of LSD, electric shocks, sensory deprivation, sexual abuse and other forms of torture.

It's not just the lies themselves that have eroded public trust; neoliberal politicians have spent the last few decades openly criticising big government. This trend was particularly strong under figures like Margaret Thatcher and Ronald Reagan, the latter of whom famously said:

The nine most terrifying words in the English language are: I'm from the Government, and I'm here to help.

# Trust In Experts

We have also seen a decline in trust with respect to research and science, and once again this is regrettably justified.

To begin with we have the corrupting influence of money in many fields of research. Here are a couple of examples:

- In the 1950s there was growing evidence that smoking led to lung cancer. To counteract this, lobbyists set up the Tobacco Industry Research Committee (TIRC) and other bodies to perform studies that muddied the water on this issue and created scientific doubt.

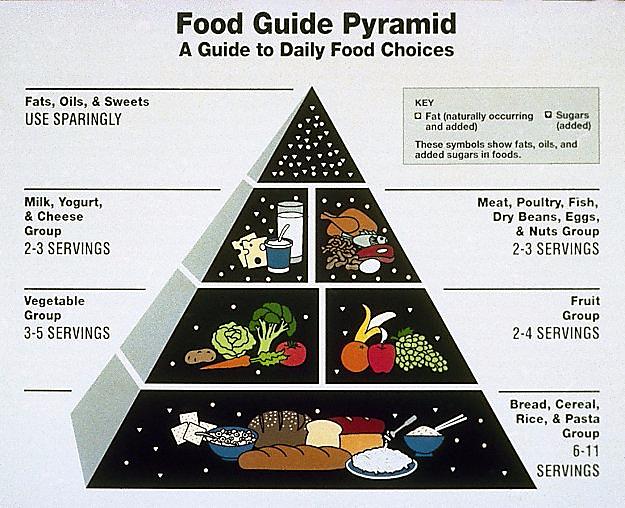

- Plenty of nutritional advice has been manipulated by the food industry over the years, but perhaps the most famous (and egregious) example is that of the Food Pyramid. The 1992 USDA Food Pyramid was disseminated widely (including in schools) but actually provided guidance that ran counter to the latest food science. Powerful food and beverage companies used their influence to shape the Food Pyramid, including reducing the recommended serves of fruit and vegetables and sharply increasing the recommended amount of processed wheat and corn.

Even when research hasn't been directly compromised by bad actors, there are less nefarious elements at play that can still lead to spurious results. In 2015, the Center for Open Science published the findings of their "Reproducibility Project". As part of this project, the participants chose 100 published psychological studies from leading journals and attempted to replicate their results. Of the 100 original studies, 97 claimed to have found statistically significant effects, but in only 35 could these effects be replicated. Even in the 35 successful experiments, the effect size was found to be smaller in most cases. A separate analysis found that approximately $28 billion worth of medical research in the US every year is not reproducible.

Part of the problem here is just experimental design. Many famous studies (most of which are still quoted today) like the Stanford Prison Experiment have been debunked due to laughably poor design decisions. A more fundamental issue, however, is the reliance on and misuse of p-values.

As mentioned previously, you don't "prove" things in science, you just find evidence to support your claims, and central to this way of thinking is "hypothesis testing". When researchers want to test a theory they come up with two hypotheses:

- The Null Hypothesis: A statement indicating that the effect being tested for does not exist.

- The Alternative Hypothesis: A statement indicating that the effect being tested for does exist.

The researcher will then perform their experiment and assess how well the data fits with these two statements. Using statistics, we can calculate the p-value for a given effect, which is the probability of observing data at least as extreme as what you have collected if the null hypothesis is true (i.e. just through random variation). If this p-value is below a pre-defined threshold (often 0.05) then the result is deemed statistically significant. Even when the experiment has been designed perfectly, there are still several pitfalls with this methodology:

- Journals Don't Publish Failed Experiments: Most articles that get published are about a statistically significant result, and this is understandable because these tend to be more interesting. What is a more exciting headline: "Crumpets Give You Cancer" or "We Found No Evidence That Crumpets Give You Cancer"? The problem here is that different researchers may repeat the same experiment (since previous failed efforts have not been published) and this can lead to misleading results. Let us assume that crumpets are not carcinogenic. If 100 people perform an experiment looking into this, you would expect to get a statistically significant result in around 5 of these experiments through random noise alone (assuming a significance level of 0.05).

- P-Hacking: Another cause of spurious correlations is a phenomenon known as "P-Hacking" (also sometimes called data dredging). Here researchers perform multiple different hypothesis tests until they get a statistically significant result. This is not always done in bad faith and can manifest organically when exploring a dataset but the problem again is that if you run hundreds of different analyses (which is often viable with large datasets) then you will get statistically significant results just through random chance.
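Both pitfalls come down to the same arithmetic, sketched below. The calculation assumes the tests are independent and that the null hypothesis is true in every one of them (crumpets really are harmless); the function names are made up for illustration.

```python
def expected_false_positives(n_tests, alpha=0.05):
    """Average number of 'significant' results produced by noise alone."""
    return n_tests * alpha

def prob_at_least_one_false_positive(n_tests, alpha=0.05):
    """Chance that at least one of n independent tests of a true null
    hypothesis comes out 'significant' at level alpha."""
    return 1 - (1 - alpha) ** n_tests

for n in (1, 10, 100):
    print(n,
          expected_false_positives(n),
          round(prob_at_least_one_false_positive(n), 3))
# With 100 tests: about 5 expected false positives, and a chance of
# roughly 0.994 that at least one of them looks "significant".
```

It makes no difference whether the 100 tests are run by 100 different labs or by one researcher dredging a single large dataset; the chance of a headline-worthy fluke is the same.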

Not all of these are existential issues and some solutions have been tabled (e.g. meta-analyses and publishing code and raw data for reproducibility), but the results of flawed analysis and experimentation over the years linger in the minds of many and have done a lot to undermine the public's faith in science and research.

To return to my model of human decision making, one of the primary ways in which we sort and categorise the torrent of information we are exposed to, is by subconsciously assessing how much we trust each source. After decades of being lied to and misled by our leaders, institutions and private companies, our current paranoid environment is entirely understandable, but arguably even less desirable. Now that admittedly flawed processes like the scientific method and democratic election have been thoroughly debased, we are floating in a sea of information, clinging to individuals and institutions like driftwood (many of whom are even less accountable and reputable than the bodies they replaced).

# Ease of Persuasion

So far we have looked at how sampling biases and the erosion (and misplacement) of trust have led to an environment where misinformation and disinformation thrive. The final component of my model of human decision-making relates to our ability to think critically about the data we come across. Most of us like to think that we are logical beings, capable of appraising arguments on their merits, but the uncomfortable truth is that flat-earthers and the folks that blame 5G for the COVID-19 pandemic think of themselves in the exact same terms.

# Limited Processing Power

As covered earlier, there is a physical limit to how much information humans can process. There are simply not enough hours in the day to critique every single issue that comes across our desk, and this means we are ill-equipped to judge veracity. Secondarily, we face the inherent unpredictability of the many chaotic and complex systems we interact with, which manifests in vehement disagreement, even between subject matter experts. As much as politicians like to appeal to our "common sense", when subjects are beyond rudimentary complexity, this way of thinking falls apart.

It is not only complex systems that confound us either. To go back to Isaac Newton, he famously said in 1675, "if I have seen further it is by standing on the shoulders of giants". Well in 2021 we are all standing on the shoulders of countless generations of giants in a colossal human pyramid, and boy can we see a long way. In all aspects of life, knowledge and culture are built on top of previous work and evolve dynamically. There is always information embedded in the history of a subject (sometimes explicitly and sometimes not), and to comprehend it fully is to traverse this history. This process of refinement and intense specialisation is the reason why when you ask somebody at a party what they do for work, they are likely to respond with a novel series of words like "Global Innovation Consultant", "Communications Change Analyst" or "Digital Experience Evangelist" (apologies if these are real jobs... I just made them up).

To further explore this evolutionary process of ideas, let us briefly move away from the mundane world of public policy and talk about movies. Ludwig Wittgenstein famously stated that:

"If a lion could speak, we could not understand him."

Similarly, if you could somehow take a person from the 1800s and show them a modern film, it would be indecipherable to them. The amount of shorthand, genre tropes and references to other movies that are embedded in films, and that we parse subconsciously, is substantial. I remember first becoming aware of Western techniques when I started watching foreign films and was often unable to pick up on these kinds of cues. Characters that I thought were going to be villains turned out to be benevolent and plot points I was sure I could see coming a mile off never eventuated. In short, these films were able to genuinely surprise me.

When a set of techniques coalesces and is employed repeatedly, we group them into a genre. There are hints in the way shots are framed, in the way dialogue is constructed, in the music cues, in the editing and in the structure of the narrative that allow the director to impart information implicitly. Consider a movie like Scream (1996), which cleverly subverted the horror genre while at times playing into the genre of comedy. While I imagine it would be possible to follow the story if you had never seen a comedy or horror movie before, there's no question that you would miss significant information and would not entirely (for want of a better phrase) "get it". Without wanting to downplay the film giants on whose shoulders modern directors stand, there is a more complex, rich and intricate web of influences and tropes in today's movies just by way of the natural complicating, evolutionary process we see in every field.

The point I'm trying to make here is that most people are not going to go all the way back and watch every important film since L'Arrivée d'un train en gare de La Ciotat, but those that do will necessarily have a deeper understanding of film-making in the present day. What nobody can do is simultaneously research every subject in this much detail.

# Cognitive Biases

While we like to imagine ourselves dispassionately adjudicating on the validity and accuracy of information, the reality is our perception is often skewed by irrelevant factors such as how interesting that information is or how well it conforms with our existing views. We all house an array of cognitive biases that subconsciously colour our decision making and distort objectivity and rationality. Here are a few examples:

- Confirmation Bias: The tendency to search for, interpret, focus on and remember information in a way that confirms one's preconceptions.

- Illusory Truth Effect: A tendency to believe that a statement is true if it is easier to process, or if it has been stated multiple times, regardless of its actual veracity.

- Groupthink: The psychological phenomenon that occurs within a group of people in which the desire for harmony or conformity in the group results in an irrational or dysfunctional decision-making outcome.

Tangentially, most humans have a sizeable blind spot when it comes to numbers. We are particularly bad at conceptualising probabilities, which is something I have written about previously with respect to the COVID-19 pandemic.

# Manipulation

Our limited processing power and cognitive biases open us up to mass manipulation by bad actors - a.k.a. the public relations industry. Whether it is McDonald's selling us burgers with impossibly seductive imagery, Instagram trawling our network of friends for advertising leads, security agents spying on us or politicians running cynical scare campaigns, the famous saying "if you're not paying then you're the product" turns out to be less vacuous than it sounds. There is conflicting evidence around just how malleable our behaviour and desires are, but certainly the modern bombardment of targeted ads, algorithmically curated content and spookily accurate product recommendations has led to the ubiquitous perception (accurate or not) that we are constantly being fucked with.

Even seeing is not believing anymore. The democratisation of photo editing software, CGI and most recently "Deep Fakes" has led to a state of affairs where we cannot trust our own eyes. We live in a world now where fantasy is indistinguishable from reality, black-box algorithms trained to sell products curate the information we receive and politicians treat Maslow's Hierarchy of Needs like a propagandist instruction manual. Some degree of paranoia is entirely rational.

# Conclusion (We Probably Didn't Find A Thylacine)

Oh yeah, wasn't this supposed to be an article on Tasmanian Tigers? The truth is I have no idea whether these animals are still out there, and given the discombobulating odyssey this piece has turned into, that feels like the appropriate place to land. I am not ruling out the possibility that Thylacine populations existed long after the 1930s, but since I began writing, our friend Neil Waters has released his evidentiary photos and I must admit they are not overly compelling to my untrained eye.

In this article, I have attempted to define and explore our relationship with information and the complex, chaotic and complicated systems that govern our lives. I outlined a simple model of how we form opinions and delved into the three vulnerabilities within this model: sampling bias, low trust in sources and ease of persuasion. I also spent some time discussing the macro psychological and political impacts of our low-trust, post-truth environment.

There are consequential snags at every step in our decision-making process, but here is some unsolicited advice for navigation:

- Learn The Cognitive Biases: We all have many blind spots in our thinking, but the more we understand these biases, the more we can course-correct. I briefly touched on Confirmation Bias and Groupthink in this article, but these are just a couple of many. Understanding these biases is infinitely more useful than mastering trigonometry, and should be taught in schools (and that's coming from somebody who does maths for a living).

- Avoid Echo Chambers: Social media is not a good place to get your news and frankly I would avoid anything with a curation/recommendation algorithm. Use an RSS aggregator like Feedly to compile a tailored newsfeed and throw in a few sources that you are ideologically opposed to for good measure.

- Don't Open Your Mind So Much Your Brain Falls Out: It's great to be sceptical but don't turn into a fedora-toting paranoiac bleating at commuters for wearing face masks.

- Show Some Humility: Maybe there really are still Thylacines out there... You are allowed to not have an opinion, and if you haven't spent time engaging with a given subject or find yourself regurgitating quotes from your favourite epic Twitter account, then it might be a sensible course of action. I'm certainly not above mocking morons like Pete Evans and Craig Kelly, but in all honesty, they have likely done substantially more reading on Hydroxychloroquine than I have. For the record, I still think they are wrong on this issue (and practically every other issue) but there have been copious examples in our recent history of widely held views turning out to be erroneous.

While much of the substance of this article has been pessimistic, I will finish by clarifying what I am not saying. I hope that in exploring our relativist predicament I have not inadvertently justified the sophistry of false equivalence. The fact that our world is intricate and difficult to understand does not, ipso facto, render every argument and opinion equally valid. The world is not flat, we really have been to the moon and QAnon theories are as ludicrous as they sound. Nothing I have said is an excuse to throw up your hands; it is merely an acknowledgement that these things are difficult. As frustrating and disorientating as the current state of affairs can be, consider for a second how wretched, impoverished and tedious our lives would be if the world were actually as mechanistic and predictable as Newton imagined. There is more beauty in caprice and volatility than you will find in the precise, hellish determinism of a grandfather clock.
